ValidMind for model validation 1 — Set up the ValidMind Library for validation

Learn how to use ValidMind for your end-to-end model validation process based on common scenarios with our series of four introductory notebooks. In this first notebook, set up the ValidMind Library in preparation for validating a champion model.

These notebooks use a binary classification model as an example, but the same principles shown here apply to other model types.

Our course tailor-made for validators new to ValidMind, Validator Fundamentals, combines this series of notebooks with a more in-depth introduction to the ValidMind Platform.

Introduction

Model validation aims to independently assess whether champion models created by model developers comply with regulatory guidance. Validators conduct thorough testing and analysis, which may include using challenger models to benchmark performance. Assessments are presented in the form of a validation report and typically include artifacts (findings) that identify issues, along with recommendations to address those issues.

The example used in this series is a binary classification model for churn analysis: a predictive model that identifies customers who are likely to leave a service or subscription by analyzing various behavioral, transactional, and demographic factors.

- This model helps businesses take proactive measures to retain at-risk customers by offering personalized incentives, improving customer service, or adjusting pricing strategies.

- Effective validation of a churn prediction model ensures that businesses can accurately identify potential churners, optimize retention efforts, and enhance overall customer satisfaction while minimizing revenue loss.

About ValidMind

ValidMind is a suite of tools for managing model risk, including risk associated with AI and statistical models.

You use the ValidMind Library to automate comparison and other validation tests, and then use the ValidMind Platform to submit compliance assessments of champion models via comprehensive validation reports. Together, these products simplify model risk management, facilitate compliance with regulations and institutional standards, and enhance collaboration between yourself and model developers.

Before you begin

This notebook assumes you have basic familiarity with Python, including an understanding of how functions work. If you are new to Python, you can still run the notebook but we recommend further familiarizing yourself with the language.

If you encounter errors due to missing modules in your Python environment, install the modules with pip install, and then re-run the notebook. For more help, refer to Installing Python Modules.

New to ValidMind?

If you haven't already seen our documentation on the ValidMind Library, we recommend you begin by exploring the available resources in this section. There, you can learn more about documenting models and running tests, as well as find code samples and our Python Library API reference.

Register with ValidMind

Key concepts

Validation report: A comprehensive and structured assessment of a model’s development and performance, focusing on verifying its integrity, appropriateness, and alignment with its intended use. It includes analyses of model assumptions, data quality, performance metrics, outcomes of testing procedures, and risk considerations. The validation report supports transparency, regulatory compliance, and informed decision-making by documenting the validator’s independent review and conclusions.

Validation report template: Serves as a standardized framework for conducting and documenting model validation activities. It outlines the required sections, recommended analyses, and expected validation tests, ensuring consistency and completeness across validation reports. The template helps guide validators through a systematic review process while promoting comparability and traceability of validation outcomes.

Tests: Functions contained in the ValidMind Library, each designed to run a specific quantitative test on the dataset or model. Tests are the building blocks of ValidMind, used to evaluate and document models and datasets.

Metrics: A subset of tests that do not have thresholds. In the context of this notebook, metrics and tests can be thought of as interchangeable concepts.
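To make the metric/test distinction concrete, here is a minimal plain-Python sketch that does not use the ValidMind API: a metric-style function returns a value, while a test-style function applies a threshold to that value and reports pass or fail. The function names and the 0.7 threshold are hypothetical, chosen only for illustration.

```python
from typing import Dict, Sequence, Union


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Metric-style function: returns a value, with no pass/fail threshold."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and the same length")
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)


def accuracy_test(
    y_true: Sequence[int], y_pred: Sequence[int], min_accuracy: float = 0.7
) -> Dict[str, Union[float, bool]]:
    """Test-style function (hypothetical): the same value plus a pass/fail threshold."""
    score = accuracy(y_true, y_pred)
    return {"accuracy": score, "threshold": min_accuracy, "passed": score >= min_accuracy}


y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
print(accuracy(y_true, y_pred))       # 0.75
print(accuracy_test(y_true, y_pred))  # {'accuracy': 0.75, 'threshold': 0.7, 'passed': True}
```

Both functions compute the same number; only the test-style function turns it into a pass/fail outcome.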

Custom metrics: Custom metrics are functions that you define to evaluate your model or dataset. These functions can be registered with the ValidMind Library to be used in the ValidMind Platform.

Inputs: Objects to be evaluated and documented in the ValidMind Library. They can be any of the following:

- model: A single model that has been initialized in ValidMind with vm.init_model().

- dataset: A single dataset that has been initialized in ValidMind with vm.init_dataset().

- models: A list of ValidMind models, usually used when you want to compare multiple models in your custom metric.

- datasets: A list of ValidMind datasets, usually used when you want to compare multiple datasets in your custom metric. (Learn more: Run tests with multiple datasets)
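The following plain-Python sketch illustrates the idea behind a `models` input: one function scores every model in a list on the same data, as you might when comparing a champion against a challenger. It does not use ValidMind; the `(name, predict)` tuples are hypothetical stand-ins for initialized ValidMind models, and the toy data and cut-off values are made up for illustration.

```python
from typing import Callable, Dict, List, Sequence, Tuple

# Hypothetical stand-in for an initialized model: a (name, predict) pair,
# where predict maps one feature row to a 0/1 churn label.
NamedModel = Tuple[str, Callable[[Sequence[float]], int]]


def compare_accuracy(
    models: List[NamedModel], X: List[Sequence[float]], y: List[int]
) -> Dict[str, float]:
    """Score every model in the `models` list on the same data."""
    results = {}
    for name, predict in models:
        correct = sum(1 for row, label in zip(X, y) if predict(row) == label)
        results[name] = correct / len(y)
    return results


# Toy data: a single feature per customer; label 1 means the customer churned.
X = [[1.0], [8.0], [2.0], [9.0], [7.0], [3.0]]
y = [0, 1, 0, 1, 0, 0]

champion = ("champion", lambda row: int(row[0] > 5.0))
challenger = ("challenger", lambda row: int(row[0] > 7.5))

results = compare_accuracy([champion, challenger], X, y)
print(results)
```

Passing the models as one list keeps the comparison in a single function call, which is the pattern the `models` input is meant to support.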

Parameters: Additional arguments that can be passed when running a ValidMind test, used to pass additional information to a metric, customize its behavior, or provide additional context.

Outputs: Custom metrics can return elements like tables or plots. Tables may be a list of dictionaries (each representing a row) or a pandas DataFrame. Plots may be matplotlib or plotly figures.
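As a sketch of the table output shape described above, assuming plain Python rather than the ValidMind API, the hypothetical function below accepts a `thresholds` parameter and returns a list of dictionaries, one per table row. The labels and probabilities are toy values.

```python
from typing import Dict, List, Sequence


def threshold_table(
    y_true: Sequence[int],
    y_prob: Sequence[float],
    thresholds: Sequence[float] = (0.3, 0.5, 0.7),
) -> List[Dict[str, float]]:
    """Hypothetical custom metric: one table row per decision threshold."""
    rows = []
    for t in thresholds:
        # Classify each customer as a churner if its probability meets the threshold.
        preds = [int(p >= t) for p in y_prob]
        pairs = list(zip(y_true, preds))
        rows.append(
            {
                "threshold": t,
                "true_positives": sum(1 for yt, yp in pairs if yt == 1 and yp == 1),
                "false_positives": sum(1 for yt, yp in pairs if yt == 0 and yp == 1),
                "false_negatives": sum(1 for yt, yp in pairs if yt == 1 and yp == 0),
            }
        )
    return rows


# Toy labels (1 = churned) and predicted churn probabilities.
y_true = [1, 0, 1, 0, 1, 0]
y_prob = [0.9, 0.4, 0.6, 0.2, 0.35, 0.8]

for row in threshold_table(y_true, y_prob):
    print(row)
```

If pandas is available, `pandas.DataFrame(rows)` converts the same list of dictionaries into a DataFrame, the other table format mentioned above.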

Setting up

Register a sample model

In a usual model lifecycle, a champion model will have been independently registered in your model inventory and submitted to you for validation by your model development team as part of the effective challenge process. (Learn more: Submit for approval)

For this notebook, we'll have you register a dummy model in the ValidMind Platform inventory and assign yourself as the validator to familiarize you with the ValidMind interface and circumvent the need for an existing model:

In a browser, log in to ValidMind.

In the left sidebar, navigate to Inventory and click + Register Model.

Enter the model details and click Next > to continue to assignment of model stakeholders. (Need more help?)

Select your own name under the MODEL OWNER drop-down. Don't worry, we'll adjust these permissions for validation next.

Click Register Model to add the model to your inventory.

Assign validator credentials

To log tests as a validator instead of as a developer, make the following changes on the model details page that appears after you've successfully registered your sample model:

Remove yourself as a model owner:

- Click on the OWNERS tile.

- Click the x next to your name to remove yourself from that model's role.

- Click Save to apply your changes to that role.

Remove yourself as a developer:

- Click on the DEVELOPERS tile.

- Click the x next to your name to remove yourself from that model's role.

- Click Save to apply your changes to that role.

Add yourself as a validator:

- Click on the VALIDATORS tile.

- Select your name from the drop-down menu.

- Click Save to apply your changes to that role.

Apply documentation template

Once you've registered your model, let's select a documentation template. A template predefines sections for your model documentation and provides a general outline to follow, making the documentation process much easier for developers.

We'll need this documentation template later for reference as we draft our validation report:

In the left sidebar that appears for your model, click Documents and select Documentation.

Under TEMPLATE, select Binary classification.

Click Use Template to apply the template.

Apply validation report template

Next, let's select a validation report template. A template predefines sections for your report and provides a general outline to follow, making the validation process much easier.

In the left sidebar that appears for your model, click Documents and select Validation Report.

Under TEMPLATE, select Generic Validation Report.

Click Use Template to apply the template.

Install the ValidMind Library

Recommended Python versions: 3.8 <= x <= 3.11
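Before installing, you can confirm that your interpreter falls within the range stated above. This is a minimal standard-library sketch; the bounds are copied from that range, the comparison uses only the major and minor version numbers, and the `is_supported` helper is hypothetical.

```python
import sys
from typing import Sequence

# Range copied from this notebook: 3.8 <= x <= 3.11 (compared on major.minor only).
MIN_VERSION = (3, 8)
MAX_VERSION = (3, 11)


def is_supported(version_info: Sequence = sys.version_info) -> bool:
    """Return True if the interpreter's major.minor version is within the range."""
    major_minor = (version_info[0], version_info[1])
    return MIN_VERSION <= major_minor <= MAX_VERSION


if is_supported():
    print("Python version is within the 3.8-3.11 range")
else:
    print(f"Python {sys.version_info[0]}.{sys.version_info[1]} is outside the 3.8-3.11 range")
```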

To install the library, run the following in a notebook cell:

%pip install -q validmind

Initialize the ValidMind Library

ValidMind generates a unique code snippet for each registered model to connect with your validation environment. You initialize the ValidMind Library with this code snippet, which ensures that your test results are uploaded to the correct model when you run the notebook.

Get your code snippet

In a browser, log in to ValidMind.

In the left sidebar, navigate to Inventory and select the model you registered for this "ValidMind for model validation" series of notebooks.

Go to Getting Started and click Copy snippet to clipboard.
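If you load credentials from an `.env` file, a quick pre-flight check can catch missing entries before you call `vm.init()`. The sketch below assumes the library reads the variables `VM_API_HOST`, `VM_API_KEY`, `VM_API_SECRET`, and `VM_API_MODEL`; confirm the exact names against the ValidMind documentation for your library version. The parser is a simplified, hypothetical helper that only handles plain `KEY=VALUE` lines (no `export` prefixes or multi-line values), and it reports key names only so that secrets are never printed. Loading the file itself is still done by the `dotenv` extension.

```python
import os
from typing import Dict, List

# Assumed variable names; verify them against the ValidMind documentation.
REQUIRED_KEYS = ["VM_API_HOST", "VM_API_KEY", "VM_API_SECRET", "VM_API_MODEL"]


def parse_env_text(text: str) -> Dict[str, str]:
    """Hypothetical helper: parse simple KEY=VALUE lines, skipping blanks and # comments."""
    values = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        # Drop surrounding double or single quotes from the value.
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def missing_keys(values: Dict[str, str]) -> List[str]:
    """List required keys that are absent or empty, without exposing their values."""
    return [key for key in REQUIRED_KEYS if not values.get(key)]


if os.path.exists(".env"):
    with open(".env", encoding="utf-8") as env_file:
        missing = missing_keys(parse_env_text(env_file.read()))
    print("Missing keys:", missing if missing else "none")
```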

Next, load your model identifier credentials from an .env file or replace the placeholder with your own code snippet:

# Load your model identifier credentials from an `.env` file
%load_ext dotenv
%dotenv .env

# Or replace with your code snippet
import validmind as vm

vm.init(
    # api_host="...",
    # api_key="...",
    # api_secret="...",
    # model="...",
)

Getting to know ValidMind

Preview the validation report template

Let's verify that you have connected the ValidMind Library to the ValidMind Platform and that the appropriate template is selected for model validation. A template predefines sections for your validation report and provides a general outline to follow, making the validation process much easier.

You will attach evidence to this template in the form of risk assessment notes, artifacts, and test results later on. For now, take a look at the default structure that the template provides with the vm.preview_template() function from the ValidMind Library:

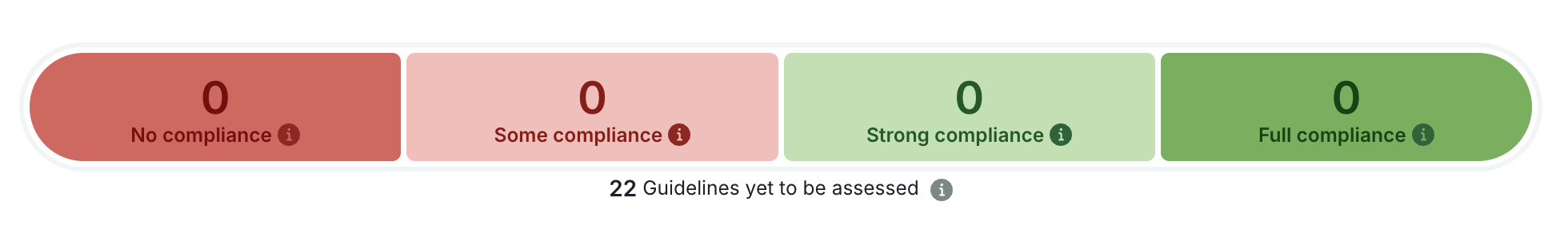

vm.preview_template()

View validation report in the ValidMind Platform

Next, let's head to the ValidMind Platform to see the template in action:

In a browser, log in to ValidMind.

In the left sidebar, navigate to Inventory and select the model you registered for this "ValidMind for model validation" series of notebooks.

Click Validation Report under Documents for your model and note:

Explore available tests

Next, let's explore the list of all available tests in the ValidMind Library with the vm.tests.list_tests() function — we'll later narrow down the tests we want to run from this list when we learn to run tests.

vm.tests.list_tests()

Upgrade ValidMind

Retrieve the information for the currently installed version of ValidMind:

%pip show validmind

If the version returned is lower than the version indicated in our production open-source code, restart your notebook and run:

%pip install --upgrade validmind

You may need to restart your kernel after upgrading the package for the changes to take effect.
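To compare versions programmatically, the standard-library sketch below reads the installed version with `importlib.metadata` and compares it with a version string you supply by hand (for example, the one listed in the production open-source code). The helpers and the `LATEST_VERSION` placeholder are hypothetical and simplified: only the leading numeric parts are compared, so for full PEP 440 handling, prefer `packaging.version.Version`.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional, Tuple


def parse_version(text: str) -> Tuple[int, ...]:
    """Keep the leading numeric parts: '2.5.0rc1' -> (2, 5, 0)."""
    parts = []
    for piece in text.split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        if not digits:
            break
        parts.append(int(digits))
        if len(digits) < len(piece):
            break  # stop at the first pre-release or local suffix
    return tuple(parts)


def needs_upgrade(installed: str, latest: str) -> bool:
    """True if `installed` is older than `latest`, padding with zeros so 2.5 == 2.5.0."""
    a, b = parse_version(installed), parse_version(latest)
    width = max(len(a), len(b))
    a += (0,) * (width - len(a))
    b += (0,) * (width - len(b))
    return a < b


def installed_validmind_version() -> Optional[str]:
    """Return the installed validmind version, or None if the package is missing."""
    try:
        return version("validmind")
    except PackageNotFoundError:
        return None


# Hypothetical placeholder: fill in the latest version by hand.
LATEST_VERSION: Optional[str] = None

current = installed_validmind_version()
if current is None:
    print("validmind is not installed in this environment")
else:
    print(f"Installed validmind version: {current}")
    if LATEST_VERSION is not None:
        print("Upgrade needed:", needs_upgrade(current, LATEST_VERSION))
```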

In summary

In this first notebook, you learned how to:

Next steps

Start the model validation process

Now that the ValidMind Library is connected to your model in the ValidMind Platform with the correct template applied, we can go ahead and start the model validation process: 2 — Start the model validation process

Copyright © 2023-2026 ValidMind Inc. All rights reserved.

Refer to LICENSE for details.

SPDX-License-Identifier: AGPL-3.0 AND ValidMind Commercial