%pip install -q validmindExplore test suites

Explore ValidMind test suites, pre-built collections of related tests used to evaluate specific aspects of your model. Retrieve available test suites and details for tests within a suite to understand their functionality, allowing you to select the appropriate test suites for your use cases.

About ValidMind

ValidMind is a suite of tools for managing model risk, including risk associated with AI and statistical models.

You use the ValidMind Library to automate documentation and validation tests, and then use the ValidMind Platform to collaborate on model documentation. Together, these products simplify model risk management, facilitate compliance with regulations and institutional standards, and enhance collaboration between yourself and model validators.

Before you begin

This notebook assumes you have basic familiarity with Python, including an understanding of how functions work. If you are new to Python, you can still run the notebook but we recommend further familiarizing yourself with the language.

If you encounter errors due to missing modules in your Python environment, install the modules with pip install, and then re-run the notebook. For more help, refer to Installing Python Modules.

New to ValidMind?

If you haven't already seen our documentation on the ValidMind Library, we recommend you begin by exploring the available resources in this section. There, you can learn more about documenting models and running tests, as well as find code samples and our Python Library API reference.

Register with ValidMind

Key concepts

Model documentation: A structured and detailed record pertaining to a model, encompassing key components such as its underlying assumptions, methodologies, data sources, inputs, performance metrics, evaluations, limitations, and intended uses. It serves to ensure transparency, adherence to regulatory requirements, and a clear understanding of potential risks associated with the model’s application.

Documentation template: Functions as a test suite and lays out the structure of model documentation, segmented into various sections and sub-sections. Documentation templates define the structure of your model documentation, specifying the tests that should be run, and how the results should be displayed.

Tests: A function contained in the ValidMind Library, designed to run a specific quantitative test on the dataset or model. Tests are the building blocks of ValidMind, used to evaluate and document models and datasets, and can be run individually or as part of a suite defined by your model documentation template.

Custom tests: Custom tests are functions that you define to evaluate your model or dataset. These functions can be registered via the ValidMind Library to be used with the ValidMind Platform.

Inputs: Objects to be evaluated and documented in the ValidMind Library. They can be any of the following:

- model: A single model that has been initialized in ValidMind with

vm.init_model(). - dataset: Single dataset that has been initialized in ValidMind with

vm.init_dataset(). - models: A list of ValidMind models - usually this is used when you want to compare multiple models in your custom test.

- datasets: A list of ValidMind datasets - usually this is used when you want to compare multiple datasets in your custom test. See this example for more information.

Parameters: Additional arguments that can be passed when running a ValidMind test, used to pass additional information to a test, customize its behavior, or provide additional context.

Outputs: Custom tests can return elements like tables or plots. Tables may be a list of dictionaries (each representing a row) or a pandas DataFrame. Plots may be matplotlib or plotly figures.

Test suites: Collections of tests designed to run together to automate and generate model documentation end-to-end for specific use-cases.

Example: the classifier_full_suite test suite runs tests from the tabular_dataset and classifier test suites to fully document the data and model sections for binary classification model use-cases.

Install the ValidMind Library

Python 3.8 <= x <= 3.11

To install the library:

List available test suites

After we import the ValidMind Library, we'll call test_suites.list_suites() to retrieve a structured list of all available test suites, that includes each suite's name, description, and associated tests:

import validmind as vm

vm.test_suites.list_suites()| ID | Name | Description | Tests |

|---|---|---|---|

| classifier_model_diagnosis | ClassifierDiagnosis | Test suite for sklearn classifier model diagnosis tests | validmind.model_validation.sklearn.OverfitDiagnosis, validmind.model_validation.sklearn.WeakspotsDiagnosis, validmind.model_validation.sklearn.RobustnessDiagnosis |

| classifier_full_suite | ClassifierFullSuite | Full test suite for binary classification models. | validmind.data_validation.DatasetDescription, validmind.data_validation.DescriptiveStatistics, validmind.data_validation.PearsonCorrelationMatrix, validmind.data_validation.ClassImbalance, validmind.data_validation.Duplicates, validmind.data_validation.HighCardinality, validmind.data_validation.HighPearsonCorrelation, validmind.data_validation.MissingValues, validmind.data_validation.Skewness, validmind.data_validation.UniqueRows, validmind.data_validation.TooManyZeroValues, validmind.model_validation.ModelMetadata, validmind.data_validation.DatasetSplit, validmind.model_validation.sklearn.ConfusionMatrix, validmind.model_validation.sklearn.ClassifierPerformance, validmind.model_validation.sklearn.PermutationFeatureImportance, validmind.model_validation.sklearn.PrecisionRecallCurve, validmind.model_validation.sklearn.ROCCurve, validmind.model_validation.sklearn.PopulationStabilityIndex, validmind.model_validation.sklearn.SHAPGlobalImportance, validmind.model_validation.sklearn.MinimumAccuracy, validmind.model_validation.sklearn.MinimumF1Score, validmind.model_validation.sklearn.MinimumROCAUCScore, validmind.model_validation.sklearn.TrainingTestDegradation, validmind.model_validation.sklearn.ModelsPerformanceComparison, validmind.model_validation.sklearn.OverfitDiagnosis, validmind.model_validation.sklearn.WeakspotsDiagnosis, validmind.model_validation.sklearn.RobustnessDiagnosis |

| classifier_metrics | ClassifierMetrics | Test suite for sklearn classifier metrics | validmind.model_validation.ModelMetadata, validmind.data_validation.DatasetSplit, validmind.model_validation.sklearn.ConfusionMatrix, validmind.model_validation.sklearn.ClassifierPerformance, validmind.model_validation.sklearn.PermutationFeatureImportance, validmind.model_validation.sklearn.PrecisionRecallCurve, validmind.model_validation.sklearn.ROCCurve, validmind.model_validation.sklearn.PopulationStabilityIndex, validmind.model_validation.sklearn.SHAPGlobalImportance |

| classifier_model_validation | ClassifierModelValidation | Test suite for binary classification models. | validmind.model_validation.ModelMetadata, validmind.data_validation.DatasetSplit, validmind.model_validation.sklearn.ConfusionMatrix, validmind.model_validation.sklearn.ClassifierPerformance, validmind.model_validation.sklearn.PermutationFeatureImportance, validmind.model_validation.sklearn.PrecisionRecallCurve, validmind.model_validation.sklearn.ROCCurve, validmind.model_validation.sklearn.PopulationStabilityIndex, validmind.model_validation.sklearn.SHAPGlobalImportance, validmind.model_validation.sklearn.MinimumAccuracy, validmind.model_validation.sklearn.MinimumF1Score, validmind.model_validation.sklearn.MinimumROCAUCScore, validmind.model_validation.sklearn.TrainingTestDegradation, validmind.model_validation.sklearn.ModelsPerformanceComparison, validmind.model_validation.sklearn.OverfitDiagnosis, validmind.model_validation.sklearn.WeakspotsDiagnosis, validmind.model_validation.sklearn.RobustnessDiagnosis |

| classifier_validation | ClassifierPerformance | Test suite for sklearn classifier models | validmind.model_validation.sklearn.MinimumAccuracy, validmind.model_validation.sklearn.MinimumF1Score, validmind.model_validation.sklearn.MinimumROCAUCScore, validmind.model_validation.sklearn.TrainingTestDegradation, validmind.model_validation.sklearn.ModelsPerformanceComparison |

| cluster_full_suite | ClusterFullSuite | Full test suite for clustering models. | validmind.model_validation.ModelMetadata, validmind.data_validation.DatasetSplit, validmind.model_validation.sklearn.HomogeneityScore, validmind.model_validation.sklearn.CompletenessScore, validmind.model_validation.sklearn.VMeasure, validmind.model_validation.sklearn.AdjustedRandIndex, validmind.model_validation.sklearn.AdjustedMutualInformation, validmind.model_validation.sklearn.FowlkesMallowsScore, validmind.model_validation.sklearn.ClusterPerformanceMetrics, validmind.model_validation.sklearn.ClusterCosineSimilarity, validmind.model_validation.sklearn.SilhouettePlot, validmind.model_validation.ClusterSizeDistribution, validmind.model_validation.sklearn.HyperParametersTuning, validmind.model_validation.sklearn.KMeansClustersOptimization |

| cluster_metrics | ClusterMetrics | Test suite for sklearn clustering metrics | validmind.model_validation.ModelMetadata, validmind.data_validation.DatasetSplit, validmind.model_validation.sklearn.HomogeneityScore, validmind.model_validation.sklearn.CompletenessScore, validmind.model_validation.sklearn.VMeasure, validmind.model_validation.sklearn.AdjustedRandIndex, validmind.model_validation.sklearn.AdjustedMutualInformation, validmind.model_validation.sklearn.FowlkesMallowsScore, validmind.model_validation.sklearn.ClusterPerformanceMetrics, validmind.model_validation.sklearn.ClusterCosineSimilarity, validmind.model_validation.sklearn.SilhouettePlot |

| cluster_performance | ClusterPerformance | Test suite for sklearn cluster performance | validmind.model_validation.ClusterSizeDistribution |

| embeddings_full_suite | EmbeddingsFullSuite | Full test suite for embeddings models. | validmind.model_validation.ModelMetadata, validmind.data_validation.DatasetSplit, validmind.model_validation.embeddings.DescriptiveAnalytics, validmind.model_validation.embeddings.CosineSimilarityDistribution, validmind.model_validation.embeddings.ClusterDistribution, validmind.model_validation.embeddings.EmbeddingsVisualization2D, validmind.model_validation.embeddings.StabilityAnalysisRandomNoise, validmind.model_validation.embeddings.StabilityAnalysisSynonyms, validmind.model_validation.embeddings.StabilityAnalysisKeyword, validmind.model_validation.embeddings.StabilityAnalysisTranslation |

| embeddings_metrics | EmbeddingsMetrics | Test suite for embeddings metrics | validmind.model_validation.ModelMetadata, validmind.data_validation.DatasetSplit, validmind.model_validation.embeddings.DescriptiveAnalytics, validmind.model_validation.embeddings.CosineSimilarityDistribution, validmind.model_validation.embeddings.ClusterDistribution, validmind.model_validation.embeddings.EmbeddingsVisualization2D |

| embeddings_model_performance | EmbeddingsPerformance | Test suite for embeddings model performance | validmind.model_validation.embeddings.StabilityAnalysisRandomNoise, validmind.model_validation.embeddings.StabilityAnalysisSynonyms, validmind.model_validation.embeddings.StabilityAnalysisKeyword, validmind.model_validation.embeddings.StabilityAnalysisTranslation |

| hyper_parameters_optimization | KmeansParametersOptimization | Test suite for sklearn hyperparameters optimization | validmind.model_validation.sklearn.HyperParametersTuning, validmind.model_validation.sklearn.KMeansClustersOptimization |

| llm_classifier_full_suite | LLMClassifierFullSuite | Full test suite for LLM classification models. | validmind.data_validation.ClassImbalance, validmind.data_validation.Duplicates, validmind.data_validation.nlp.StopWords, validmind.data_validation.nlp.Punctuations, validmind.data_validation.nlp.CommonWords, validmind.data_validation.nlp.TextDescription, validmind.model_validation.ModelMetadata, validmind.data_validation.DatasetSplit, validmind.model_validation.sklearn.ConfusionMatrix, validmind.model_validation.sklearn.ClassifierPerformance, validmind.model_validation.sklearn.PermutationFeatureImportance, validmind.model_validation.sklearn.PrecisionRecallCurve, validmind.model_validation.sklearn.ROCCurve, validmind.model_validation.sklearn.PopulationStabilityIndex, validmind.model_validation.sklearn.SHAPGlobalImportance, validmind.model_validation.sklearn.MinimumAccuracy, validmind.model_validation.sklearn.MinimumF1Score, validmind.model_validation.sklearn.MinimumROCAUCScore, validmind.model_validation.sklearn.TrainingTestDegradation, validmind.model_validation.sklearn.ModelsPerformanceComparison, validmind.model_validation.sklearn.OverfitDiagnosis, validmind.model_validation.sklearn.WeakspotsDiagnosis, validmind.model_validation.sklearn.RobustnessDiagnosis, validmind.prompt_validation.Bias, validmind.prompt_validation.Clarity, validmind.prompt_validation.Conciseness, validmind.prompt_validation.Delimitation, validmind.prompt_validation.NegativeInstruction, validmind.prompt_validation.Robustness, validmind.prompt_validation.Specificity |

| prompt_validation | PromptValidation | Test suite for prompt validation | validmind.prompt_validation.Bias, validmind.prompt_validation.Clarity, validmind.prompt_validation.Conciseness, validmind.prompt_validation.Delimitation, validmind.prompt_validation.NegativeInstruction, validmind.prompt_validation.Robustness, validmind.prompt_validation.Specificity |

| nlp_classifier_full_suite | NLPClassifierFullSuite | Full test suite for NLP classification models. | validmind.data_validation.ClassImbalance, validmind.data_validation.Duplicates, validmind.data_validation.nlp.StopWords, validmind.data_validation.nlp.Punctuations, validmind.data_validation.nlp.CommonWords, validmind.data_validation.nlp.TextDescription, validmind.model_validation.ModelMetadata, validmind.data_validation.DatasetSplit, validmind.model_validation.sklearn.ConfusionMatrix, validmind.model_validation.sklearn.ClassifierPerformance, validmind.model_validation.sklearn.PermutationFeatureImportance, validmind.model_validation.sklearn.PrecisionRecallCurve, validmind.model_validation.sklearn.ROCCurve, validmind.model_validation.sklearn.PopulationStabilityIndex, validmind.model_validation.sklearn.SHAPGlobalImportance, validmind.model_validation.sklearn.MinimumAccuracy, validmind.model_validation.sklearn.MinimumF1Score, validmind.model_validation.sklearn.MinimumROCAUCScore, validmind.model_validation.sklearn.TrainingTestDegradation, validmind.model_validation.sklearn.ModelsPerformanceComparison, validmind.model_validation.sklearn.OverfitDiagnosis, validmind.model_validation.sklearn.WeakspotsDiagnosis, validmind.model_validation.sklearn.RobustnessDiagnosis |

| regression_metrics | RegressionMetrics | Test suite for performance metrics of regression metrics | validmind.data_validation.DatasetSplit, validmind.model_validation.ModelMetadata, validmind.model_validation.sklearn.PermutationFeatureImportance |

| regression_model_description | RegressionModelDescription | Test suite for performance metric of regression model of statsmodels library | validmind.data_validation.DatasetSplit, validmind.model_validation.ModelMetadata |

| regression_models_evaluation | RegressionModelsEvaluation | Test suite for metrics comparison of regression model of statsmodels library | validmind.model_validation.statsmodels.RegressionModelCoeffs, validmind.model_validation.sklearn.RegressionModelsPerformanceComparison |

| regression_full_suite | RegressionFullSuite | Full test suite for regression models. | validmind.data_validation.DatasetDescription, validmind.data_validation.DescriptiveStatistics, validmind.data_validation.PearsonCorrelationMatrix, validmind.data_validation.ClassImbalance, validmind.data_validation.Duplicates, validmind.data_validation.HighCardinality, validmind.data_validation.HighPearsonCorrelation, validmind.data_validation.MissingValues, validmind.data_validation.Skewness, validmind.data_validation.UniqueRows, validmind.data_validation.TooManyZeroValues, validmind.data_validation.DatasetSplit, validmind.model_validation.ModelMetadata, validmind.model_validation.sklearn.PermutationFeatureImportance, validmind.model_validation.sklearn.RegressionErrors, validmind.model_validation.sklearn.RegressionR2Square |

| regression_performance | RegressionPerformance | Test suite for regression model performance | validmind.model_validation.sklearn.RegressionErrors, validmind.model_validation.sklearn.RegressionR2Square |

| summarization_metrics | SummarizationMetrics | Test suite for Summarization metrics | validmind.model_validation.TokenDisparity, validmind.model_validation.BleuScore, validmind.model_validation.BertScore, validmind.model_validation.ContextualRecall |

| tabular_dataset | TabularDataset | Test suite for tabular datasets. | validmind.data_validation.DatasetDescription, validmind.data_validation.DescriptiveStatistics, validmind.data_validation.PearsonCorrelationMatrix, validmind.data_validation.ClassImbalance, validmind.data_validation.Duplicates, validmind.data_validation.HighCardinality, validmind.data_validation.HighPearsonCorrelation, validmind.data_validation.MissingValues, validmind.data_validation.Skewness, validmind.data_validation.UniqueRows, validmind.data_validation.TooManyZeroValues |

| tabular_dataset_description | TabularDatasetDescription | Test suite to extract metadata and descriptive statistics from a tabular dataset | validmind.data_validation.DatasetDescription, validmind.data_validation.DescriptiveStatistics, validmind.data_validation.PearsonCorrelationMatrix |

| tabular_data_quality | TabularDataQuality | Test suite for data quality on tabular datasets | validmind.data_validation.ClassImbalance, validmind.data_validation.Duplicates, validmind.data_validation.HighCardinality, validmind.data_validation.HighPearsonCorrelation, validmind.data_validation.MissingValues, validmind.data_validation.Skewness, validmind.data_validation.UniqueRows, validmind.data_validation.TooManyZeroValues |

| text_data_quality | TextDataQuality | Test suite for data quality on text data | validmind.data_validation.ClassImbalance, validmind.data_validation.Duplicates, validmind.data_validation.nlp.StopWords, validmind.data_validation.nlp.Punctuations, validmind.data_validation.nlp.CommonWords, validmind.data_validation.nlp.TextDescription |

| time_series_data_quality | TimeSeriesDataQuality | Test suite for data quality on time series datasets | validmind.data_validation.TimeSeriesOutliers, validmind.data_validation.TimeSeriesMissingValues, validmind.data_validation.TimeSeriesFrequency |

| time_series_dataset | TimeSeriesDataset | Test suite for time series datasets. | validmind.data_validation.TimeSeriesOutliers, validmind.data_validation.TimeSeriesMissingValues, validmind.data_validation.TimeSeriesFrequency, validmind.data_validation.TimeSeriesLinePlot, validmind.data_validation.TimeSeriesHistogram, validmind.data_validation.ACFandPACFPlot, validmind.data_validation.SeasonalDecompose, validmind.data_validation.AutoSeasonality, validmind.data_validation.AutoStationarity, validmind.data_validation.RollingStatsPlot, validmind.data_validation.AutoAR, validmind.data_validation.AutoMA, validmind.data_validation.ScatterPlot, validmind.data_validation.LaggedCorrelationHeatmap, validmind.data_validation.EngleGrangerCoint, validmind.data_validation.SpreadPlot |

| time_series_model_validation | TimeSeriesModelValidation | Test suite for time series model validation. | validmind.data_validation.DatasetSplit, validmind.model_validation.ModelMetadata, validmind.model_validation.statsmodels.RegressionModelCoeffs, validmind.model_validation.sklearn.RegressionModelsPerformanceComparison |

| time_series_multivariate | TimeSeriesMultivariate | This test suite provides a preliminary understanding of the features and relationship in multivariate dataset. It presents various multivariate visualizations that can help identify patterns, trends, and relationships between pairs of variables. The visualizations are designed to explore the relationships between multiple features simultaneously. They allow you to quickly identify any patterns or trends in the data, as well as any potential outliers or anomalies. The individual feature distribution can also be explored to provide insight into the range and frequency of values observed in the data. This multivariate analysis test suite aims to provide an overview of the data structure and guide further exploration and modeling. | validmind.data_validation.ScatterPlot, validmind.data_validation.LaggedCorrelationHeatmap, validmind.data_validation.EngleGrangerCoint, validmind.data_validation.SpreadPlot |

| time_series_univariate | TimeSeriesUnivariate | This test suite provides a preliminary understanding of the target variable(s) used in the time series dataset. It visualizations that present the raw time series data and a histogram of the target variable(s). The raw time series data provides a visual inspection of the target variable's behavior over time. This helps to identify any patterns or trends in the data, as well as any potential outliers or anomalies. The histogram of the target variable displays the distribution of values, providing insight into the range and frequency of values observed in the data. | validmind.data_validation.TimeSeriesLinePlot, validmind.data_validation.TimeSeriesHistogram, validmind.data_validation.ACFandPACFPlot, validmind.data_validation.SeasonalDecompose, validmind.data_validation.AutoSeasonality, validmind.data_validation.AutoStationarity, validmind.data_validation.RollingStatsPlot, validmind.data_validation.AutoAR, validmind.data_validation.AutoMA |

View test suite details

Use the test_suites.describe_suite() function to retrieve information about a test suite, including its name, description, and the list of tests it contains.

You can call test_suites.describe_suite() with just the test suite ID to get basic details, or pass an additional verbose parameter for a more comprehensive output:

- Test ID - The identifier of the test suite you want to inspect.

- Verbose - A Boolean flag. Set

verbose=Trueto return a full breakdown of the test suite.

vm.test_suites.describe_suite("classifier_full_suite", verbose=True)| Test Suite ID | Test Suite Name | Test Suite Section | Test ID | Test Name |

|---|---|---|---|---|

| classifier_full_suite | ClassifierFullSuite | tabular_dataset_description | validmind.data_validation.DatasetDescription | Dataset Description |

| classifier_full_suite | ClassifierFullSuite | tabular_dataset_description | validmind.data_validation.DescriptiveStatistics | Descriptive Statistics |

| classifier_full_suite | ClassifierFullSuite | tabular_dataset_description | validmind.data_validation.PearsonCorrelationMatrix | Pearson Correlation Matrix |

| classifier_full_suite | ClassifierFullSuite | tabular_data_quality | validmind.data_validation.ClassImbalance | Class Imbalance |

| classifier_full_suite | ClassifierFullSuite | tabular_data_quality | validmind.data_validation.Duplicates | Duplicates |

| classifier_full_suite | ClassifierFullSuite | tabular_data_quality | validmind.data_validation.HighCardinality | High Cardinality |

| classifier_full_suite | ClassifierFullSuite | tabular_data_quality | validmind.data_validation.HighPearsonCorrelation | High Pearson Correlation |

| classifier_full_suite | ClassifierFullSuite | tabular_data_quality | validmind.data_validation.MissingValues | Missing Values |

| classifier_full_suite | ClassifierFullSuite | tabular_data_quality | validmind.data_validation.Skewness | Skewness |

| classifier_full_suite | ClassifierFullSuite | tabular_data_quality | validmind.data_validation.UniqueRows | Unique Rows |

| classifier_full_suite | ClassifierFullSuite | tabular_data_quality | validmind.data_validation.TooManyZeroValues | Too Many Zero Values |

| classifier_full_suite | ClassifierFullSuite | classifier_metrics | validmind.model_validation.ModelMetadata | Model Metadata |

| classifier_full_suite | ClassifierFullSuite | classifier_metrics | validmind.data_validation.DatasetSplit | Dataset Split |

| classifier_full_suite | ClassifierFullSuite | classifier_metrics | validmind.model_validation.sklearn.ConfusionMatrix | Confusion Matrix |

| classifier_full_suite | ClassifierFullSuite | classifier_metrics | validmind.model_validation.sklearn.ClassifierPerformance | Classifier Performance |

| classifier_full_suite | ClassifierFullSuite | classifier_metrics | validmind.model_validation.sklearn.PermutationFeatureImportance | Permutation Feature Importance |

| classifier_full_suite | ClassifierFullSuite | classifier_metrics | validmind.model_validation.sklearn.PrecisionRecallCurve | Precision Recall Curve |

| classifier_full_suite | ClassifierFullSuite | classifier_metrics | validmind.model_validation.sklearn.ROCCurve | ROC Curve |

| classifier_full_suite | ClassifierFullSuite | classifier_metrics | validmind.model_validation.sklearn.PopulationStabilityIndex | Population Stability Index |

| classifier_full_suite | ClassifierFullSuite | classifier_metrics | validmind.model_validation.sklearn.SHAPGlobalImportance | SHAP Global Importance |

| classifier_full_suite | ClassifierFullSuite | classifier_validation | validmind.model_validation.sklearn.MinimumAccuracy | Minimum Accuracy |

| classifier_full_suite | ClassifierFullSuite | classifier_validation | validmind.model_validation.sklearn.MinimumF1Score | Minimum F1 Score |

| classifier_full_suite | ClassifierFullSuite | classifier_validation | validmind.model_validation.sklearn.MinimumROCAUCScore | Minimum ROCAUC Score |

| classifier_full_suite | ClassifierFullSuite | classifier_validation | validmind.model_validation.sklearn.TrainingTestDegradation | Training Test Degradation |

| classifier_full_suite | ClassifierFullSuite | classifier_validation | validmind.model_validation.sklearn.ModelsPerformanceComparison | Models Performance Comparison |

| classifier_full_suite | ClassifierFullSuite | classifier_model_diagnosis | validmind.model_validation.sklearn.OverfitDiagnosis | Overfit Diagnosis |

| classifier_full_suite | ClassifierFullSuite | classifier_model_diagnosis | validmind.model_validation.sklearn.WeakspotsDiagnosis | Weakspots Diagnosis |

| classifier_full_suite | ClassifierFullSuite | classifier_model_diagnosis | validmind.model_validation.sklearn.RobustnessDiagnosis | Robustness Diagnosis |

View test details

To inspect a specific test in a suite, pass the name of the test to tests.describe_test() to get detailed information about the test such as its purpose, strengths and limitations:

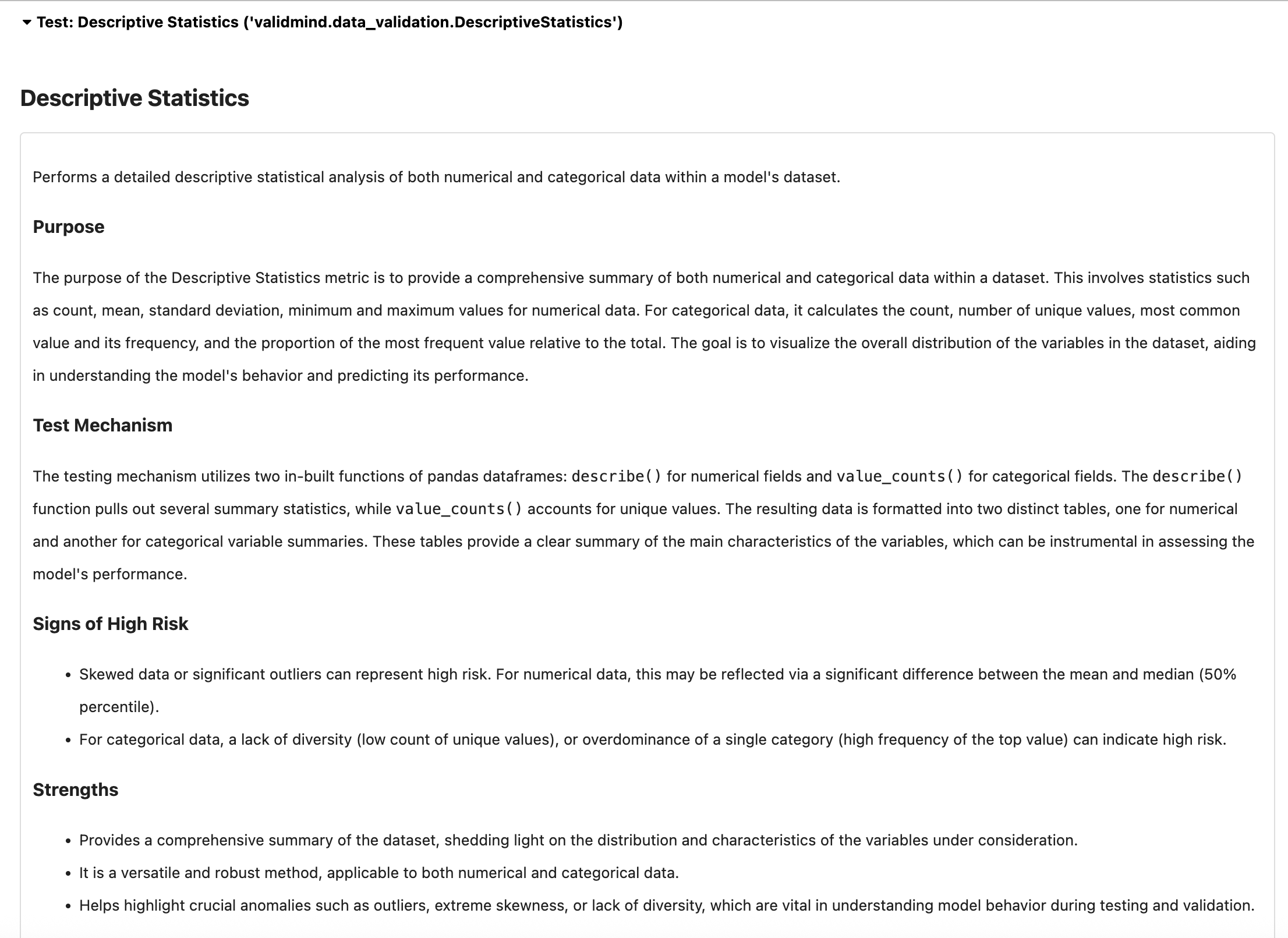

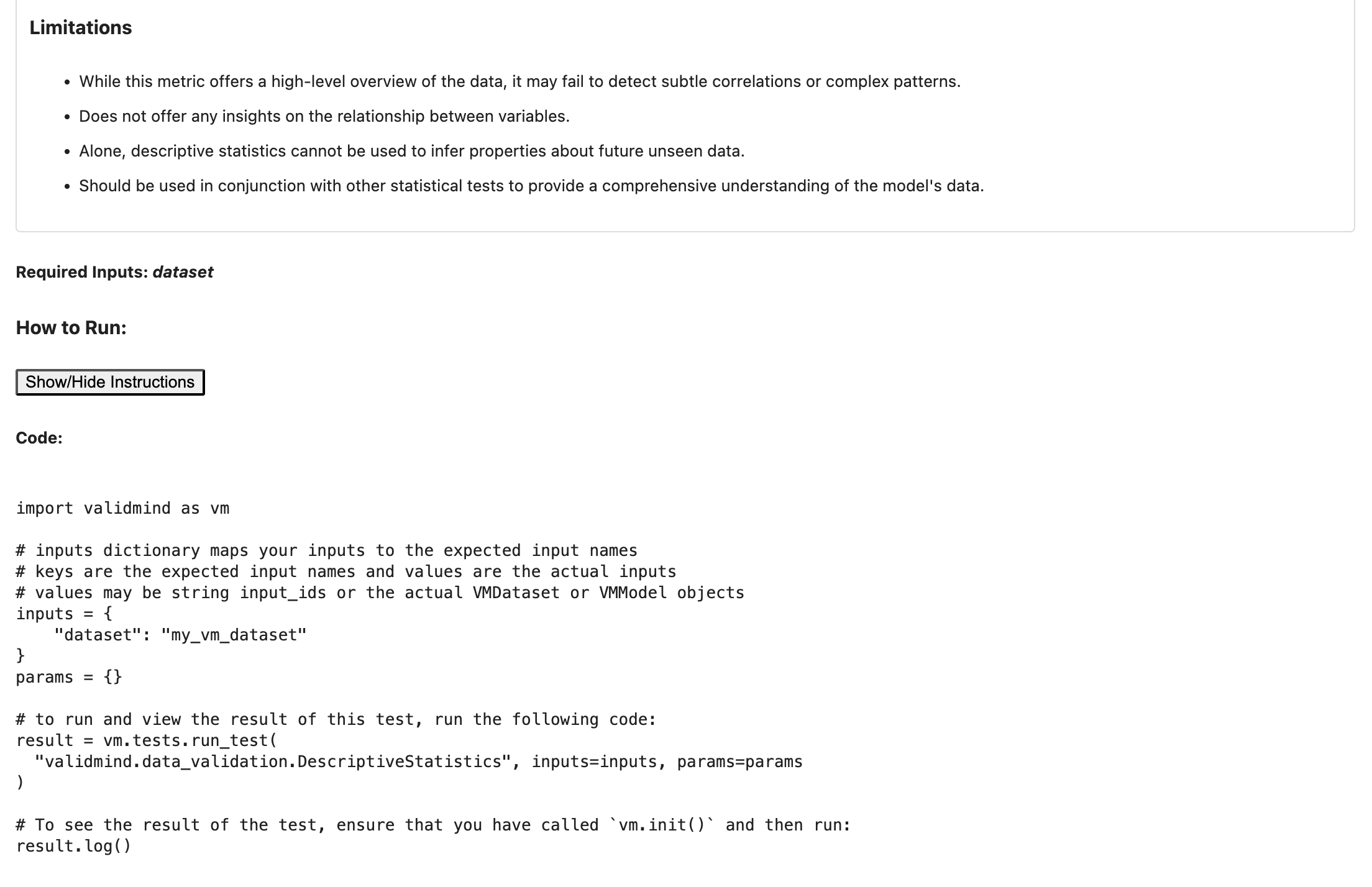

vm.tests.describe_test("validmind.data_validation.DescriptiveStatistics")

Next steps

Now that you’ve learned how to identify ValidMind test suites relevant to your use cases, we encourage you to explore our interactive notebooks to discover additional tests, learn how to run them, and effectively document your models.

Check out our Explore tests notebook for more code examples and usage of key functions.

Discover more learning resources

We offer many interactive notebooks to help you document models:

Or, visit our documentation to learn more about ValidMind.