Running Data Quality Tests

Validator Fundamentals — Module 2 of 4


Learning objectives

“As a validator who has connected to a champion model via the ValidMind Library, I want to identify relevant tests to run from ValidMind’s test repository, run and log data quality tests, and insert the test results into my model’s validation report.”

This second module is part of a four-part series:

Validator Fundamentals

Module 2 — Contents

First, let’s make sure you can log in to ValidMind.

Training is interactive — you explore ValidMind live. Try it!


Before you begin

To continue, you need to have been onboarded onto ValidMind Academy with the Validator role and completed the first module of this course:

- Log in to check your access:

Be sure to return to this page afterwards.

- After you successfully log in, refresh the page to connect this training module to the ValidMind Platform:

ValidMind for model validation

Jupyter Notebook series

These notebooks walk you through how to validate a model using ValidMind, complete with supporting test results attached as evidence to your validation report.

To proceed, you need to have already completed 1 — Set up the ValidMind Library for validation during the first module.


Our series of four introductory notebooks for model validators includes sample code and how-to information to get you started with ValidMind:

1 — Set up the ValidMind Library for validation

2 — Start the model validation process

3 — Developing a potential challenger model

4 — Finalize testing and reporting

In this second module, we’ll run through 2 — Start the model validation process together.

Let’s continue our journey with 2 — Start the model validation process on the next page.

2 — Start the model validation process

During this course, we’ll run through these notebooks together, and at the end of your learning journey you’ll have a fully supported sample validation report ready for review.

For now, scroll through this notebook to explore. When you are done, click to continue.

Explore ValidMind tests

ValidMind test repository

ValidMind provides a wealth of out-of-the-box tests to help you ensure that your model is being built appropriately.

In this module, you’ll become familiar with the individual tests available in ValidMind, as well as how to run them and change parameters as necessary.

For now, scroll through these test descriptions to explore. When you’re done, click to continue.

Get your code snippet

ValidMind generates a unique code snippet for each registered model to connect with your local environment:

- Select the name of the model you registered for this course to open the model details page.

- In the left sidebar that appears for your model, click Getting Started.

- Locate the code snippet and click Copy snippet to clipboard.

Can’t load the ValidMind Platform?

Make sure you’re logged in and have refreshed the page in a Chromium-based web browser.

Connect to your model

With your code snippet copied to your clipboard:

- Open 2 — Start the model validation process: JupyterHub

- Run the cell under the Setting up section.

When you’re done, return to this page and click to continue.

Load the sample dataset

After you’ve successfully initialized the ValidMind Library, let’s import the sample dataset that was used to develop the dummy champion model:

- Continue with 2 — Start the model validation process: JupyterHub

- Run the cell under the Load the sample dataset section.

When you’re done, return to this page and click to continue.

Identify qualitative tests

Next, we’ll use the list_tests() function to pinpoint tests we want to run:

- Continue with 2 — Start the model validation process: JupyterHub

- Run all the cells under the Verifying data quality adjustments section: Identify qualitative tests

When you’re done, return to this page and click to continue.
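In the notebook, list_tests() searches ValidMind's test repository. As a rough, library-free sketch of what filtering a catalog looks like, the snippet below narrows a small hypothetical list of tests by tag and keyword. The `CATALOG` entries, their tags, and the `filter_tests` helper are illustrative assumptions modeled on ValidMind's test ID naming style; they are not the library's metadata or API.

```python
# Hypothetical subset of a test catalog. The IDs follow ValidMind's naming
# style, but the tag assignments are illustrative assumptions only.
CATALOG = [
    {"id": "validmind.data_validation.ClassImbalance", "tags": {"tabular_data", "data_quality"}},
    {"id": "validmind.data_validation.HighPearsonCorrelation", "tags": {"tabular_data", "correlation"}},
    {"id": "validmind.data_validation.MissingValues", "tags": {"tabular_data", "data_quality"}},
    {"id": "validmind.model_validation.sklearn.ROCCurve", "tags": {"model_performance"}},
]


def filter_tests(catalog, tags=None, keyword=None):
    """Return IDs of tests that carry every requested tag and contain the keyword."""
    required = set(tags or [])
    matches = []
    for test in catalog:
        # Skip tests missing any of the requested tags.
        if not required.issubset(test["tags"]):
            continue
        # Case-insensitive substring match on the test ID.
        if keyword and keyword.lower() not in test["id"].lower():
            continue
        matches.append(test["id"])
    return matches


data_quality_tests = filter_tests(CATALOG, tags=["data_quality"])
```

Here `data_quality_tests` holds the class imbalance and missing values IDs, which mirrors the idea of shortlisting qualitative data tests before running them.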

Initialize ValidMind datasets

Then, we’ll use the init_dataset() function to connect the sample data with a ValidMind Dataset object in preparation for running tests:

- Continue with 2 — Start the model validation process: JupyterHub

- Run the following cell in the Verifying data quality adjustments section: Initialize the ValidMind datasets

When you’re done, return to this page and click to continue.
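init_dataset() wraps raw data in a ValidMind Dataset object so that tests know which columns are features and which is the target. The plain-Python sketch below only illustrates that idea of pairing rows with an input ID and a target column. `SimpleDataset` is a hypothetical class, not the library's Dataset, and the `Exited`, `CreditScore`, and `Age` columns are assumed example data.

```python
from dataclasses import dataclass, field


@dataclass
class SimpleDataset:
    """Hypothetical stand-in for a dataset wrapper: rows plus the metadata tests need."""
    input_id: str
    rows: list            # each row is a dict mapping column name -> value
    target_column: str
    feature_columns: list = field(default_factory=list)

    def __post_init__(self):
        if not self.rows:
            raise ValueError("dataset must contain at least one row")
        columns = list(self.rows[0].keys())
        if self.target_column not in columns:
            raise ValueError(f"target column {self.target_column!r} not found")
        # Default to every non-target column as a feature.
        if not self.feature_columns:
            self.feature_columns = [c for c in columns if c != self.target_column]

    def target_values(self):
        """Return the target column as a list, in row order."""
        return [row[self.target_column] for row in self.rows]


raw = SimpleDataset(
    input_id="raw_dataset",
    rows=[
        {"CreditScore": 619, "Age": 42, "Exited": 1},
        {"CreditScore": 608, "Age": 41, "Exited": 0},
    ],
    target_column="Exited",
)
```

Validating the target column up front means a typo fails at initialization rather than partway through a test run.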

Run ValidMind tests

Run data quality tests

You run individual tests by calling the run_test() function provided by the validmind.tests module:

- Continue with 2 — Start the model validation process: JupyterHub

- Run all the cells under the Verifying data quality adjustments section: Run data quality tests

When you’re done, return to this page and click to continue.
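A class imbalance check asks whether any target class makes up too small a share of the data. The stdlib sketch below computes class percentages, flags classes under a minimum-percentage threshold, and then rebalances by randomly undersampling the larger class. The 10% threshold, the undersampling strategy, and the toy `Exited` labels are assumptions for illustration; ValidMind's own class imbalance test and the notebook's preprocessing may work differently.

```python
import random
from collections import Counter


def class_imbalance(labels, min_percent_threshold=10.0):
    """Return {class: (percent, passed)}, where passed means percent >= threshold."""
    counts = Counter(labels)
    total = len(labels)
    return {
        cls: (100.0 * n / total, 100.0 * n / total >= min_percent_threshold)
        for cls, n in counts.items()
    }


def undersample(rows, target, seed=42):
    """Randomly drop rows from larger classes until every class matches the smallest."""
    by_class = {}
    for row in rows:
        by_class.setdefault(row[target], []).append(row)
    n_min = min(len(group) for group in by_class.values())
    rng = random.Random(seed)  # fixed seed keeps the result reproducible
    balanced = []
    for group in by_class.values():
        balanced.extend(rng.sample(group, n_min))
    rng.shuffle(balanced)
    return balanced


rows = [{"Exited": 0} for _ in range(19)] + [{"Exited": 1}]
before = class_imbalance([r["Exited"] for r in rows])   # minority class is 5%
balanced = undersample(rows, "Exited")
after = class_imbalance([r["Exited"] for r in balanced])
```

Undersampling discards data, so on real datasets it is a trade-off against alternatives such as oversampling or class weighting.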

Remove highly correlated features

You can reuse the output of a ValidMind test in later steps, for example, to remove highly correlated features:

- Continue with 2 — Start the model validation process: JupyterHub

- Run all the cells under the Verifying data quality adjustments section: Remove highly correlated features

When you’re done, return to this page and click to continue.
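The pattern here is to turn a correlation test's output into a list of features to drop. As a library-free illustration, the sketch below computes pairwise Pearson correlation between columns, flags pairs whose absolute coefficient exceeds a threshold, and drops the first feature of each flagged pair. The 0.3 threshold, the drop-the-first rule, and the sample columns are assumptions; the notebook's own selection logic may differ.

```python
import math
from itertools import combinations


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length numeric sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0 or sy == 0:
        return 0.0  # treat constant columns as uncorrelated (a simplifying choice)
    return cov / (sx * sy)


def high_correlation_pairs(columns, max_threshold=0.3):
    """Return (feature_a, feature_b, r) for every pair with |r| above the threshold."""
    flagged = []
    for a, b in combinations(columns, 2):
        r = pearson(columns[a], columns[b])
        if abs(r) > max_threshold:
            flagged.append((a, b, r))
    return flagged


def drop_correlated(columns, max_threshold=0.3):
    """Drop the first feature of each flagged pair and return the remaining columns."""
    to_drop = {a for a, _, _ in high_correlation_pairs(columns, max_threshold)}
    return {name: values for name, values in columns.items() if name not in to_drop}


features = {
    "Balance": [0.0, 50.0, 100.0, 150.0],
    "BalanceScaled": [0.0, 0.5, 1.0, 1.5],   # a rescaled copy of Balance
    "Tenure": [3.0, 1.0, 4.0, 2.0],
}
remaining = drop_correlated(features)
```

Dropping one feature per flagged pair is greedy; with chains of correlated features, a different rule (for example, keeping the feature most related to the target) may retain more information.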

Log ValidMind tests

Every test result returned by the run_test() function has a .log() method that can be used to send the test results to the ValidMind Platform:

- When logging individual test results to the platform, you’ll need to manually add those results to the desired section of the validation report.

- To demonstrate how to add test results to your validation report, we’ll log our data quality tests and insert the results via the ValidMind Platform.

Configure and run comparison tests

You can use the ValidMind Library to run comparison tests across datasets or models. Here, we compare the original raw dataset with the final preprocessed dataset, then log the results to the ValidMind Platform:

- Continue with 2 — Start the model validation process: JupyterHub

- Run all the cells under the Documenting test results section: Configure and run comparison tests

When you’re done, return to this page and click to continue.
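Conceptually, a comparison test runs the same test once per combination of inputs, for example once on the raw dataset and once on the preprocessed dataset, so the results can be read side by side. The sketch below shows that expansion with `itertools.product`. `expand_input_grid`, `run_comparison`, and the toy `minority_share` test are hypothetical helpers, and the label lists stand in for real datasets; this illustrates the idea rather than how the ValidMind Library implements comparisons.

```python
from itertools import product


def expand_input_grid(input_grid):
    """Turn {"name": [option, ...]} into one dict per combination of options."""
    names = list(input_grid)
    return [dict(zip(names, combo)) for combo in product(*(input_grid[n] for n in names))]


def run_comparison(test_fn, input_grid):
    """Run test_fn once per input combination and collect (inputs, result) pairs."""
    return [(inputs, test_fn(**inputs)) for inputs in expand_input_grid(input_grid)]


def minority_share(dataset):
    """Toy test: percentage of rows belonging to the least common label."""
    counts = {}
    for label in dataset:
        counts[label] = counts.get(label, 0) + 1
    return 100.0 * min(counts.values()) / len(dataset)


# Placeholder "datasets": lists of target labels.
raw_labels = [0, 0, 0, 1]
preprocessed_labels = [0, 1, 0, 1]

results = run_comparison(minority_share, {"dataset": [raw_labels, preprocessed_labels]})
```

With one input varying over two options, the grid yields two runs; adding a second varying input (such as two models) multiplies the number of runs.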

Log tests with unique identifiers

When running individual tests, you can use a custom result_id to tag the individual result with a unique identifier:

- Continue with 2 — Start the model validation process: JupyterHub

- Run the cell under the Documenting test results section: Log tests with unique identifiers

When you’re done, return to this page and click to continue.
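A custom result_id lets you log the same test more than once, for example on two different datasets, and still tell the results apart. The sketch below uses a hypothetical in-memory `ResultStore` keyed by a `test_id:tag` string. The store, its duplicate check, and the colon-separated format are assumptions for illustration, so check the notebook cell for the exact identifier convention it uses.

```python
class ResultStore:
    """Hypothetical in-memory stand-in for a results backend, keyed by result ID."""

    def __init__(self):
        self._results = {}

    def log(self, test_id, result, tag=None):
        # Append an optional tag so repeated runs of one test get distinct keys.
        result_id = f"{test_id}:{tag}" if tag else test_id
        if result_id in self._results:
            raise KeyError(f"result {result_id!r} already logged; use a new tag")
        self._results[result_id] = result
        return result_id

    def ids(self):
        """Return all logged result IDs in sorted order."""
        return sorted(self._results)


store = ResultStore()
store.log("validmind.data_validation.ClassImbalance", {"passed": False}, tag="raw_dataset")
store.log("validmind.data_validation.ClassImbalance", {"passed": True}, tag="preprocessed_dataset")
```

Rejecting duplicate IDs is a choice made for this sketch to make collisions visible; a real backend may instead version or overwrite results.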

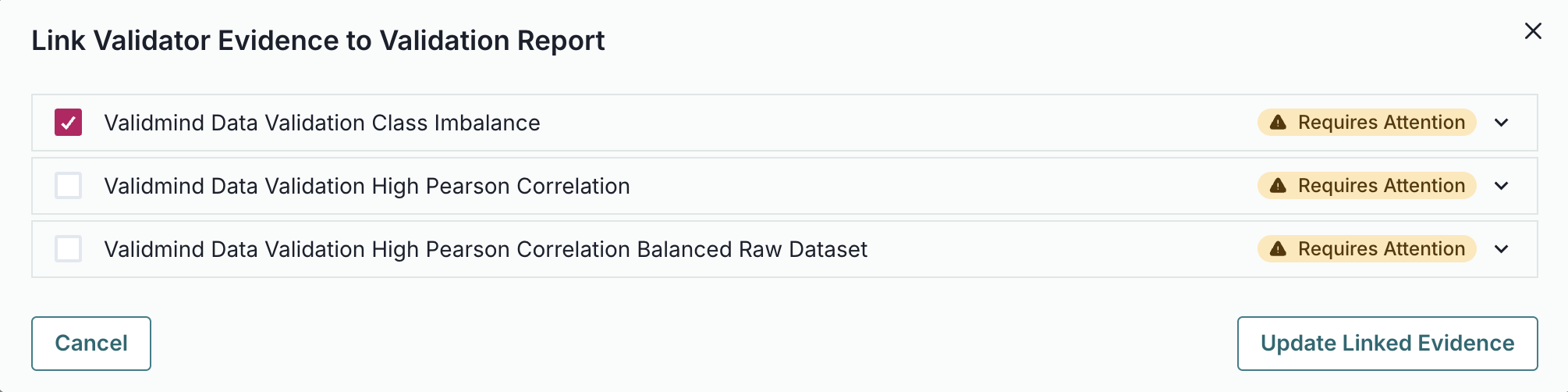

Link validator evidence

With some test results logged, let’s head to the model we connected to at the beginning of this notebook and insert our test results into the validation report as evidence.

While the example below focuses on a specific test result, you can follow the same general procedure for your other results:

- From the Inventory in the ValidMind Platform, go to the model you connected to earlier.

- In the left sidebar that appears for your model, click Validation Report under Documents.

- Locate the Data Preparation section and click 2.2.1. Data Quality to expand that section.

- Under the Class Imbalance Assessment section, locate Validator Evidence, then click Link Evidence to Report.

- Select the Class Imbalance test results we logged: ValidMind Data Validation Class Imbalance

- Click Update Linked Evidence to add the test results to the validation report.

- Confirm that the Class Imbalance test results have been inserted into section 2.2.1. Data Quality of the report.

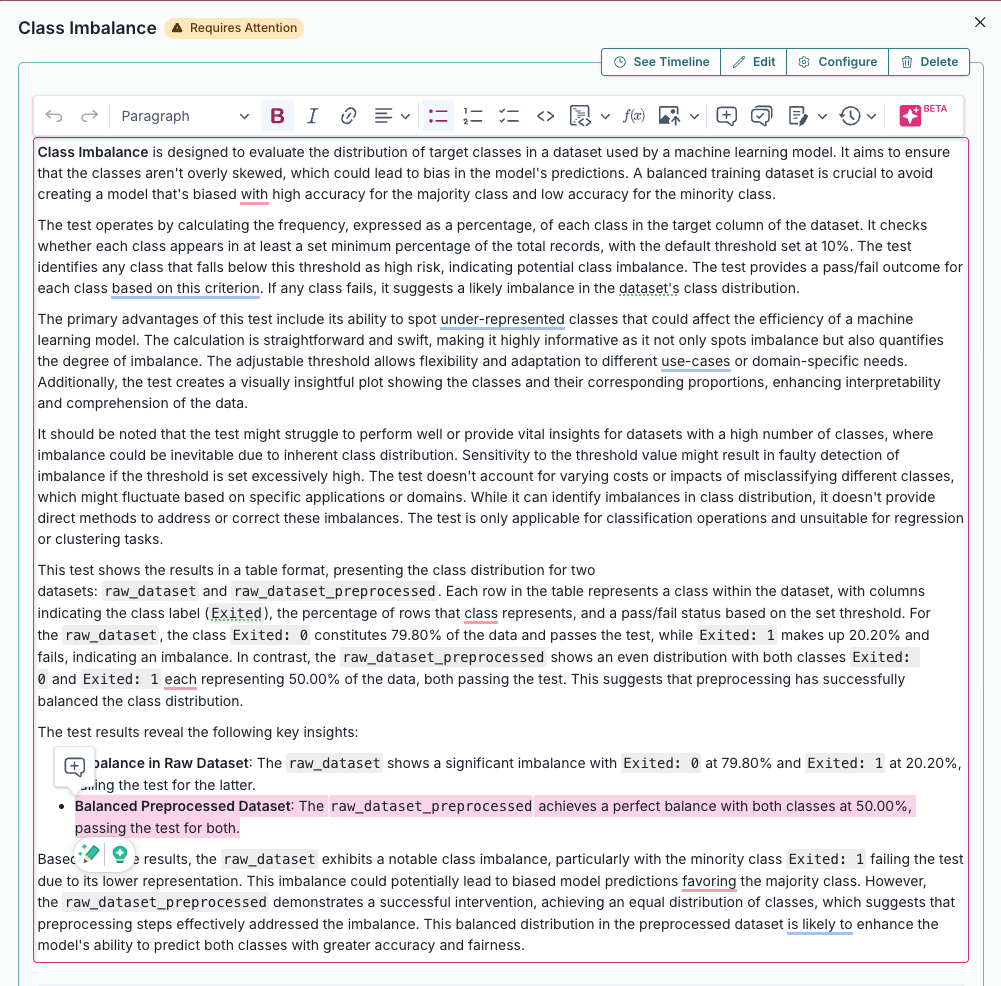

Once the results are linked as evidence to section 2.2.1. Data Quality, note that the ValidMind Data Validation Class Imbalance test results are flagged as Requires Attention because they include comparative results from our initial raw dataset.

Click See evidence details to review the LLM-generated description that summarizes the test results and confirms that our final preprocessed dataset passes the test.


Prepare datasets for model evaluation

Split the preprocessed dataset

So far, we’ve rebalanced our raw dataset and used the results of ValidMind tests to remove highly correlated features from it. Next, let’s split our dataset into training and test sets in preparation for model evaluation testing:

- Continue with 2 — Start the model validation process: JupyterHub

- Run all the cells under the Split the preprocessed dataset section.

When you’re done, return to this page and click to continue.
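Splitting holds back part of the preprocessed data so the model can be evaluated on rows it was not trained on. A minimal stdlib sketch with a seeded shuffle follows. The hypothetical `train_test_split` helper, the 80/20 ratio, and the seed of 42 are assumptions, and the notebook may use a different split function or ratio.

```python
import random


def train_test_split(rows, test_size=0.2, seed=42):
    """Shuffle a copy of rows with a fixed seed, then hold out the last test_size share."""
    if not 0.0 < test_size < 1.0:
        raise ValueError("test_size must be between 0 and 1")
    shuffled = list(rows)                 # copy so the caller's data is untouched
    random.Random(seed).shuffle(shuffled)
    n_test = max(1, round(len(shuffled) * test_size))
    return shuffled[:-n_test], shuffled[-n_test:]


rows = list(range(10))
train, test = train_test_split(rows)
```

Because the classes were already rebalanced, a plain random split is reasonable here; on imbalanced data, a stratified split that preserves class proportions in both sets is usually safer.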

In summary

Running data quality tests

In this second module, you learned how to:

Continue your model validation journey with:

Developing Challenger Models
