Managing AI Use Cases

AI Governance — Module 2 of 4

Learning objectives

“As an AI governance professional, I want to learn how to register AI use cases, conduct impact assessments, and manage lifecycle stages in ValidMind.”

This is the second module in the four-part AI Governance series.

Module 2 — Contents

Training is interactive — you explore ValidMind live. Try it!

Use case inventories

What is a use case inventory?

A use case inventory is a centralized registry of all AI systems and their purposes. It helps you:

Understand where AI is used

Track ownership and accountability

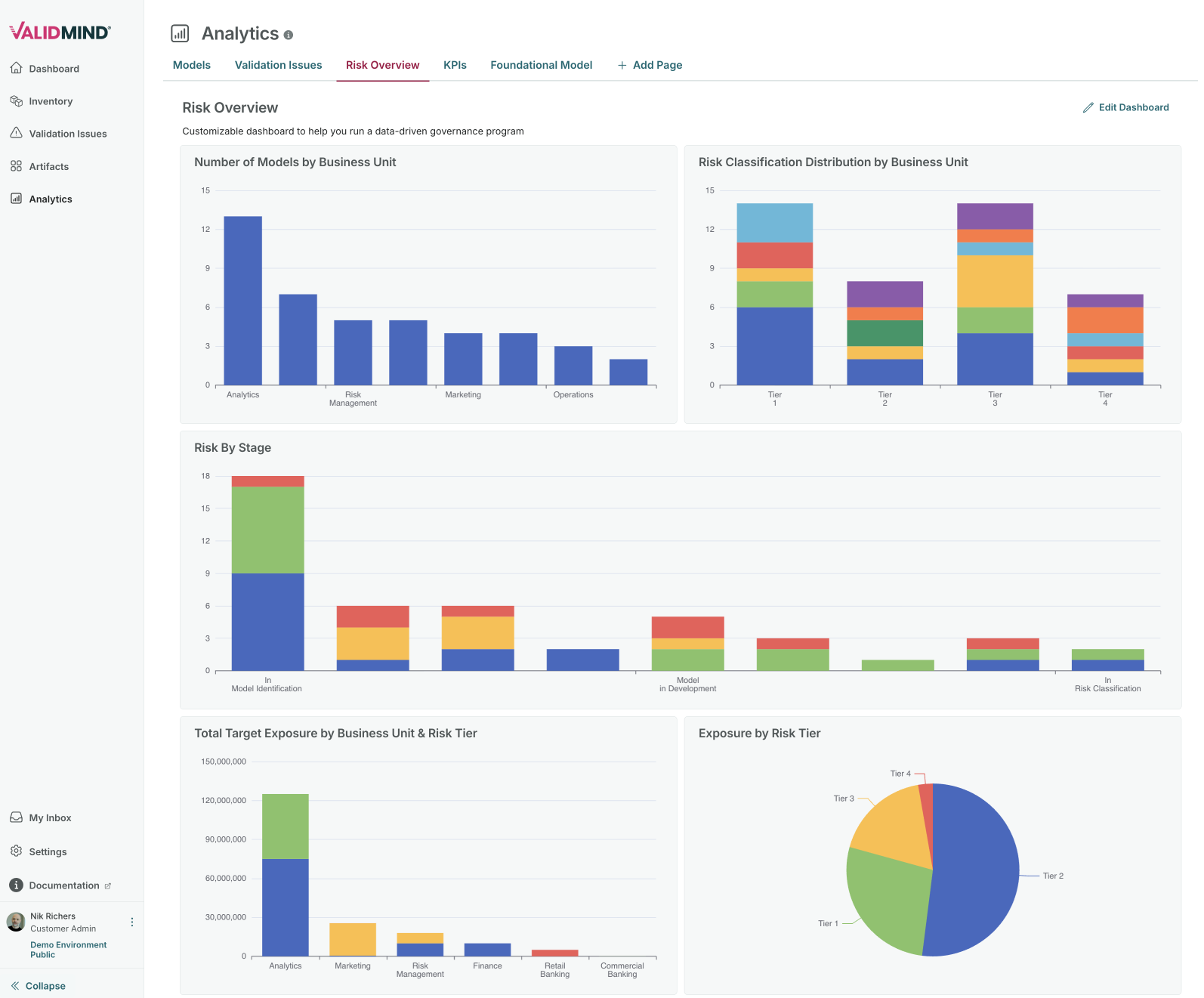

Assess aggregate risk exposure

Demonstrate governance to regulators
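As a rough illustration of what a registry like this holds, the sketch below models an inventory in plain Python. It is not ValidMind's API or data model: the `UseCase` fields, the `UseCaseInventory` class, and its method names are all assumptions made for this example.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class UseCase:
    """One inventory entry. All field names are illustrative assumptions."""
    name: str
    purpose: str
    owner: str
    risk_tier: str


class UseCaseInventory:
    """A tiny in-memory registry keyed by use case name (hypothetical)."""

    def __init__(self) -> None:
        self._entries: dict[str, UseCase] = {}

    def register(self, use_case: UseCase) -> None:
        # Reject duplicates so each AI system appears exactly once.
        if use_case.name in self._entries:
            raise ValueError(f"{use_case.name!r} is already registered")
        self._entries[use_case.name] = use_case

    def owned_by(self, owner: str) -> list[UseCase]:
        # Supports ownership and accountability questions.
        return [uc for uc in self._entries.values() if uc.owner == owner]

    def risk_exposure(self) -> Counter:
        # Counts use cases per risk tier to summarize aggregate exposure.
        return Counter(uc.risk_tier for uc in self._entries.values())
```

With a structure like this, "where is AI used and who owns it" becomes a simple query, and the per-tier counts give a first view of aggregate risk exposure.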

Inventory fields

The inventory in action

Risk classification

Why classify risk?

Risk classification enables proportionate governance. Higher-risk AI systems receive:

- More rigorous review

- Additional documentation requirements

- Enhanced monitoring

- Stricter approval gates

Classification schemes

Align your classification to relevant regulations:

| Framework | Classification levels |
|---|---|
| EU AI Act | Prohibited, high-risk, limited-risk, minimal-risk |
| Internal | Critical, high, medium, low |
| Tiered | Tier 1, Tier 2, Tier 3, Tier 4 |
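To make proportionate governance concrete, here is a minimal sketch that uses the internal scheme (critical, high, medium, low) and looks up which controls apply at each level. The thresholds in `CONTROLS` are assumptions chosen for illustration; your organization decides which level triggers which control, and none of this reflects how ValidMind stores risk tiers.

```python
from enum import IntEnum


class RiskLevel(IntEnum):
    """Internal classification levels, ordered so higher means riskier."""
    LOW = 1
    MEDIUM = 2
    HIGH = 3
    CRITICAL = 4


# (minimum level, control) pairs. The thresholds are hypothetical.
CONTROLS = [
    (RiskLevel.MEDIUM, "additional documentation requirements"),
    (RiskLevel.HIGH, "more rigorous review"),
    (RiskLevel.HIGH, "enhanced monitoring"),
    (RiskLevel.CRITICAL, "stricter approval gates"),
]


def required_controls(level: RiskLevel) -> list[str]:
    """Return every control whose minimum level is at or below `level`."""
    return [control for minimum, control in CONTROLS if level >= minimum]
```

Because the levels are ordered, a higher classification always inherits the controls of the levels beneath it.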

Configuring risk tiers

Impact assessments

Purpose of impact assessments

Impact assessments evaluate potential risks and harms from AI deployment. They document:

- Who is affected by the AI system

- What decisions the AI influences

- Potential for harm or discrimination

- Mitigating controls

Impact assessment process

- Identify stakeholders — Who does this AI system affect?

- Assess impact — What are the potential consequences?

- Evaluate risks — What could go wrong?

- Document controls — How are risks mitigated?

- Review and approve — Governance sign-off
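The five steps above can be sketched as a record that refuses sign-off until the earlier steps are complete. This is an illustrative model only: `ImpactAssessment`, its fields, and the rule that every risk needs a documented control are assumptions for this example, not ValidMind features.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ImpactAssessment:
    """Hypothetical record mirroring the five process steps."""
    use_case: str
    stakeholders: list[str] = field(default_factory=list)
    impacts: list[str] = field(default_factory=list)
    risks: list[str] = field(default_factory=list)
    controls: dict[str, str] = field(default_factory=dict)  # risk -> mitigation
    approved_by: Optional[str] = None

    def outstanding_steps(self) -> list[str]:
        """List the steps that still need work before approval."""
        steps = []
        if not self.stakeholders:
            steps.append("identify stakeholders")
        if not self.impacts:
            steps.append("assess impact")
        if not self.risks:
            steps.append("evaluate risks")
        # Assumption: every identified risk must have a documented control.
        if not self.risks or any(r not in self.controls for r in self.risks):
            steps.append("document controls")
        return steps

    def approve(self, reviewer: str) -> None:
        """Record governance sign-off only when nothing is outstanding."""
        missing = self.outstanding_steps()
        if missing:
            raise ValueError(f"cannot approve, outstanding: {missing}")
        self.approved_by = reviewer
```

Keeping the completeness check separate from `approve` lets a reviewer see what is missing without attempting sign-off.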

Recording assessments

Use ValidMind to:

- Attach impact assessment documentation

- Track assessment completion status

- Route assessments through approval workflows

- Maintain an audit trail of governance decisions

Lifecycle stages

AI governance lifecycle

The AI governance lifecycle moves from intake and risk assessment through documentation and validation to a formal approval gate, then deployment, ongoing monitoring, and periodic review — with a feedback loop so systems can be re-assessed and re-approved when needed.

Managing stage transitions

ValidMind tracks AI systems through their lifecycle:

- Status fields indicate current stage

- Workflows control transitions

- Documentation captures stage requirements

- Audit trail records all changes
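One way to picture how status fields, controlled transitions, and an audit trail fit together is a small state machine. The sketch below is written under assumptions: the stage names follow the lifecycle described above, but the `ALLOWED` transition map, the `TrackedSystem` class, and the audit record layout are hypothetical and do not describe ValidMind's workflow engine.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from enum import Enum


class Stage(Enum):
    INTAKE = "intake"
    RISK_ASSESSMENT = "risk assessment"
    DOCUMENTATION = "documentation"
    VALIDATION = "validation"
    APPROVAL = "approval"
    DEPLOYMENT = "deployment"
    MONITORING = "monitoring"
    PERIODIC_REVIEW = "periodic review"


# Hypothetical transition rules, including the feedback loop from
# periodic review back to risk assessment.
ALLOWED = {
    Stage.INTAKE: {Stage.RISK_ASSESSMENT},
    Stage.RISK_ASSESSMENT: {Stage.DOCUMENTATION},
    Stage.DOCUMENTATION: {Stage.VALIDATION},
    Stage.VALIDATION: {Stage.APPROVAL, Stage.DOCUMENTATION},
    Stage.APPROVAL: {Stage.DEPLOYMENT, Stage.DOCUMENTATION},
    Stage.DEPLOYMENT: {Stage.MONITORING},
    Stage.MONITORING: {Stage.PERIODIC_REVIEW},
    Stage.PERIODIC_REVIEW: {Stage.MONITORING, Stage.RISK_ASSESSMENT},
}


@dataclass
class TrackedSystem:
    name: str
    stage: Stage = Stage.INTAKE  # status field: the current stage
    audit_trail: list = field(default_factory=list)

    def transition(self, target: Stage, actor: str) -> None:
        """Move to `target` if the rules allow it, and log the change."""
        if target not in ALLOWED[self.stage]:
            raise ValueError(
                f"{self.stage.value} -> {target.value} is not allowed"
            )
        timestamp = datetime.now(timezone.utc)
        self.audit_trail.append((timestamp, actor, self.stage, target))
        self.stage = target
```

Rejected transitions leave both the stage and the audit trail untouched, so the trail records only changes that actually happened.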

Next steps

Continue to Module 3 to learn about configuring AI workflows.
